Server Outage Incident

A cloud service outage may seem unlikely, but I recently experienced one. I am logging this event only to remind everyone to put some data protection in place, NOT to suggest that one ISP is better than another.

Incident Timeline

Notes: All notifications below are directly from the ISP. The time zone is Mountain Standard Time (UTC-07:00).

Mar. 06, 2023

SJC is out of hardware resources.

The new SSD, RAM, and servers are on the way.

- Two nodes will be rebooted next weekend (March 17 or 19) for a RAM upgrade.

- The block storage will be doubled, and the snapshot will be available this weekend (March 10 or 12).

- Your VM might be cold-migrated (one reboot) without notice to a new node due to tight hardware resources.

- New instance purchases will be available the day after the action (March 11 or 13) if action no. 2 is completed on time.

Summary: They will upgrade the data center because it has run out of hardware resources. Instances (VMs) may be migrated and rebooted.

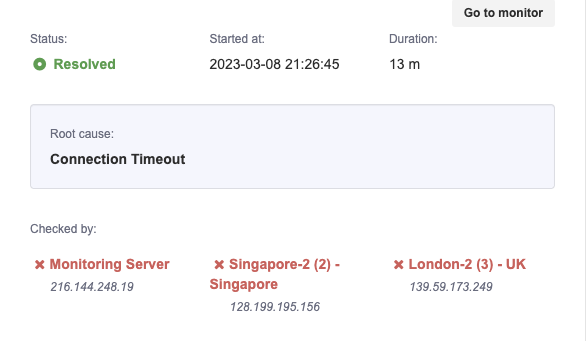

Mar. 08, 2023

We have to perform an emergency reboot for some SJC nodes. It will be done very soon.

Summary: Something went wrong, but they did not specify the cause. The service was interrupted for around 13 minutes.

Mar. 09, 2023

01:03 PM

We've noticed I/O errors in SJC; investigating. We'll keep you posted.

01:10 PM

The network components we used implemented Layer3+4 hashing for the bond interface, which is not supported by InfiniBand.

It caused a disconnection dead loop for the entire SJC Ceph cluster.

We've removed the config applied by the component and locked it.

01:14 PM

We are experiencing extremely high load in SJC; the new hardware is on the way.

The new NVMe block storage hardware will be installed tomorrow.

08:08 PM

We are working on restoring Ceph-OSD. The problem has been found, but recovery will still take more time.

09:11 PM

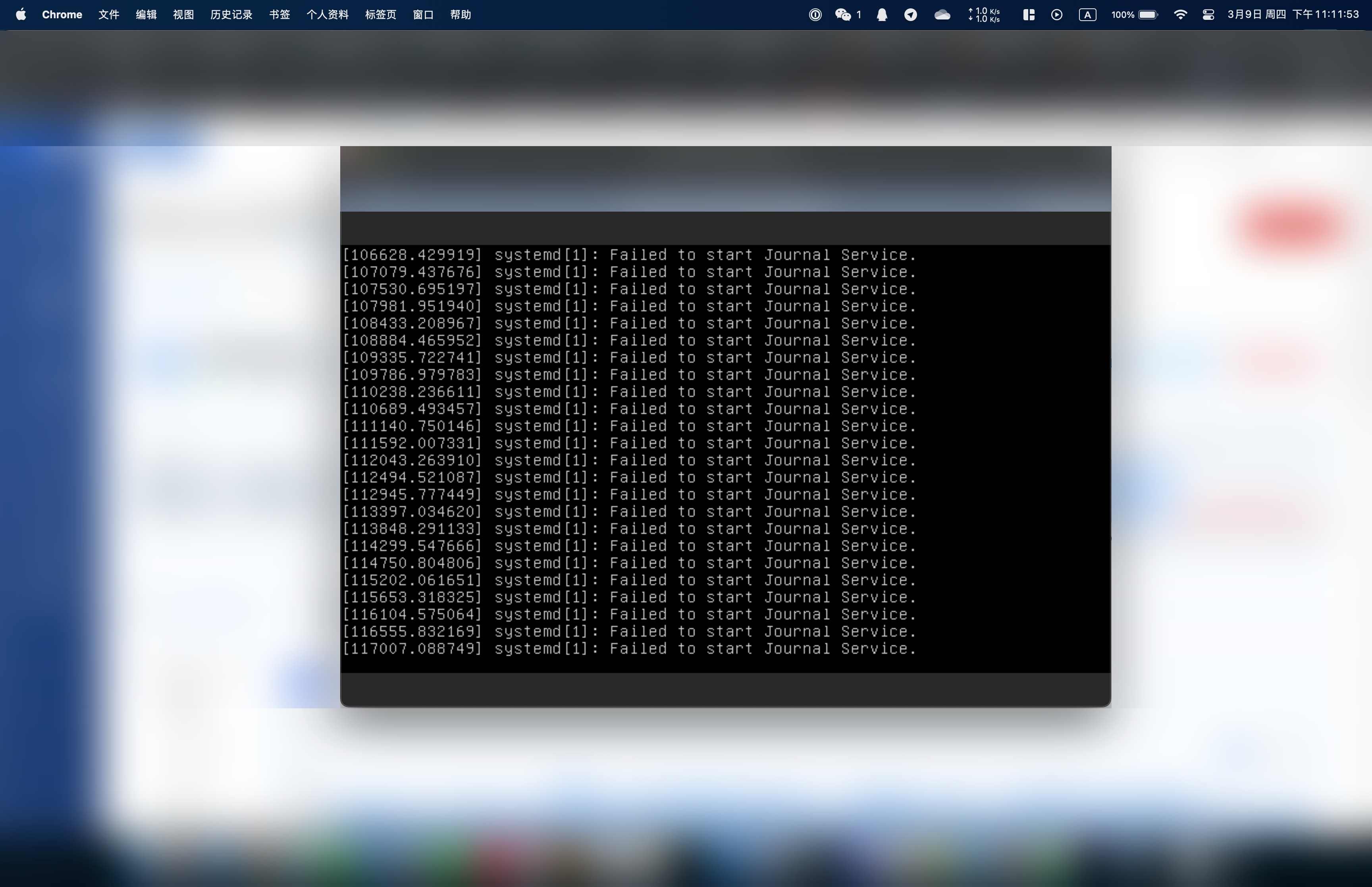

We are still working on it; we suggest do not reboot if you still able to run your system, since the I/O is currently suspended.

A screenshot from the console:

09:41 PM

Remote hands are on the way to the SJC location to install the hardware required for the repair.

The SLA solution will be posted after the repair is done.

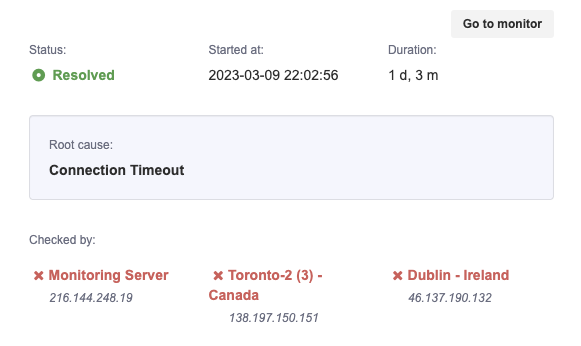

10:02 PM

Mar. 10, 2023

12:43 AM

OSD recovery, backfill in progress.

02:05 AM

Step 1 still needs ~4 hrs; 70% of VMs will return to normal;

Step 2 will take another ~4 hrs; 99% of VMs will return to normal;

Step 3 needs a whole day; it will only affect IO performance, not uptime.

The SLA is lower than what the TOS offers. Reimbursement will be issued case by case; please submit a ticket after the event ends.

We are deeply sorry for the recent SLA drop that may have caused inconvenience to your business operations. We understand the importance of our services to your business and we take full responsibility for this interruption.

The fault report will be posted after the event.

07:25 AM

Ceph does not allow running after a partial recovery; step 2 is in progress.

01:14 PM

Step 2 complete;

Because one OSD failed to recover, and because of data differences that accumulated in the meantime, 13/512 (2.5390625%) of the data cannot be recovered.

Once again, we apologize for any inconvenience or concern that this may have caused. We value your trust and we will continue to work hard to earn and maintain it.

05:19 PM

[ISP offers a compensation package as a token of apology]

06:20 PM

The initial Summary:

~March 1

On or about March 1, [ISP Name] San Jose received a large number of VM orders (almost double the number of VMs at that time).

~March 3

[ISP Name] noticed the tight resources and immediately stopped accepting new orders.

Memory resources were released to the two new nodes purchased last month.

The available storage resources were already lower than 30% at that time.

~March 6

On March 6, we increased the set-full-ratio of the OSD from 90% to 95% in order to prevent IO outages.

But this was still not enough to solve the problem, and we had ordered a sufficient number of P5510 and P5520 7.68TB SSDs on March 3.

FedEx was expected to deliver on March 7, and we were scheduled to install these SSDs on March 8.

Due to the California weather, the delivery was delayed to March 9, and we planned to install the SSDs on March 10 to relieve the pressure.

~March 8

On the night of March 8, we completed network maintenance, which caused the OSD to reboot.

Also, due to OSD overload, BlueStore did not have enough space to allocate the 4% log during startup, so the OSD refused to boot. At this point, it still only resulted in reduced IO performance.

~March 9

Due to the continued writes, on the morning of March 9, another OSD failed and triggered a backfill, which set off a chain reaction: a third OSD was written to full and then failed to start. This eventually led to the current condition.

We immediately arranged the on-site installation on March 9, but some PGs were still lost.

=== Tech Notes

- San Jose uses the latest tech stack of [ISP Name]. We did not know that BlueStore would use 4% of the total OSD as a log. We thought it was included in the data. Once the data uses all the space, the log cannot be allocated during initialization, which leads to failure.

- San Jose has never had such a high VM growth rate before; the doubled orders gave us limited time to upgrade.

=== Management Notes

- [ISP Name] will prepare to upgrade a location once its resource usage is over 60%.

- [ISP Name] will reject orders if we cannot immediately keep resource usage below 80%.
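The two thresholds in the management notes can be read as a simple capacity policy. The following is only a sketch of that reading, not the ISP's actual tooling: the function names, the unit of capacity, and the exact comparison operators are my assumptions.

```python
# Hypothetical capacity policy based on the 60% / 80% thresholds quoted above.
UPGRADE_THRESHOLD = 0.60  # start planning an upgrade above this usage
REJECT_THRESHOLD = 0.80   # never let accepted orders push usage to this level


def needs_upgrade_planning(used: float, capacity: float) -> bool:
    """Return True once current usage is over 60% of capacity."""
    return used / capacity > UPGRADE_THRESHOLD


def should_accept_order(used: float, order_size: float, capacity: float) -> bool:
    """Accept an order only if usage stays below 80% after accepting it."""
    return (used + order_size) / capacity < REJECT_THRESHOLD


if __name__ == "__main__":
    # Example with an arbitrary capacity of 100 units.
    print(needs_upgrade_planning(61, 100))    # True
    print(should_accept_order(70, 9, 100))    # True  (79% after the order)
    print(should_accept_order(70, 10, 100))   # False (80% after the order)
```

Under this reading, the order burst around March 1 would have been rejected well before storage reached the levels described in the summary.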

Summary: The whole data center lost around 2.54% of its data.
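The percentage quoted by the ISP can be checked with a short calculation. This is a minimal sketch with illustrative variable names. The ISP did not say what the 512 units represent; given the mention of lost PGs, they may be placement groups, but that is my assumption.

```python
from fractions import Fraction

lost_units = 13    # unrecoverable units reported by the ISP
total_units = 512  # total units reported by the ISP (meaning not stated)

# Use an exact fraction first, then convert to a percentage.
lost_percent = float(Fraction(lost_units, total_units) * 100)

print(f"{lost_percent}%")      # 2.5390625%
print(f"{lost_percent:.2f}%")  # 2.54%
```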

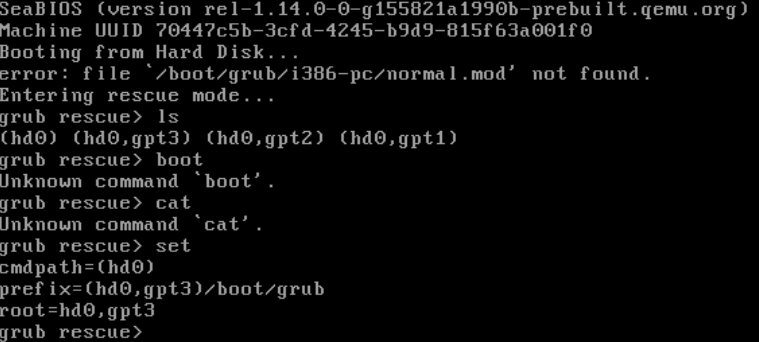

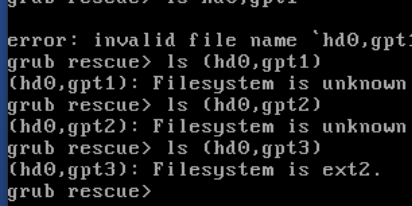

The console shows missing Linux bootable file:

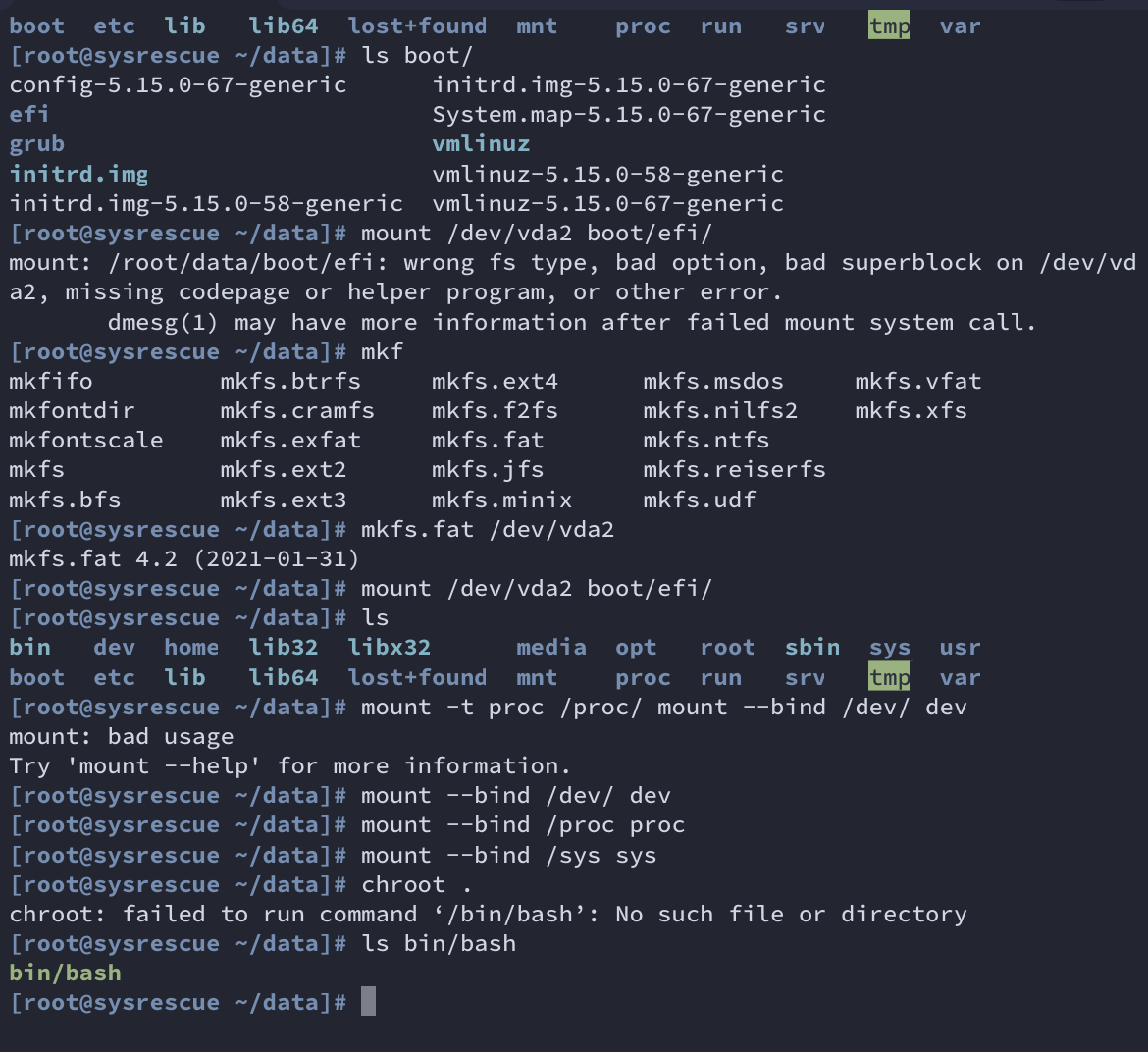

Try to fix it manually

Mar. 10, 2023

After a brief investigation by a friend and me, we found that the main partition was not damaged. However, core system files were missing, so the system could not boot normally. This can usually be fixed by mounting the drive in another bootable environment (aka Rescue Mode), but the ISP's control panel did not offer this feature, so we submitted a ticket.

Mar. 12, 2023

Two days later, the technician notified me that the primary partition had been mounted on a new system and gave me login credentials. On inspection, the original system libraries were seriously damaged and almost impossible to repair. Many files had also been lost at random. The final solution was to copy the accessible data off the instance.

Re-build && Recover from backup

Mar. 10, 2023

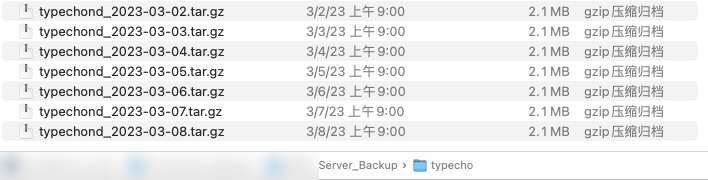

Immediately after confirming that the system was damaged, a new server was purchased from another vendor, and the previous setup was rebuilt from the latest backup. Since my friend and I had never experienced anything like this before, our automated script had backed up only the core files before the server crashed, so the rebuilding process was quite tedious.

Mar. 11, 2023

All services were back online.

Mar. 12, 2023

Improved all auto-backup scripts to ensure that every workspace is backed up.
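For illustration, a whole-workspace backup can be as simple as archiving the entire directory instead of a hand-picked list of core files. This is a minimal sketch, not the actual script: the function name, paths, and archive naming scheme are hypothetical, and it uses only the Python standard library.

```python
import tarfile
import time
from pathlib import Path


def backup_workspace(workspace: Path, dest_dir: Path) -> Path:
    """Archive the whole workspace directory into a timestamped .tar.gz."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = dest_dir / f"{workspace.name}-{stamp}.tar.gz"
    with tarfile.open(archive, "w:gz") as tar:
        # Adding the directory itself recurses into every file below it.
        tar.add(workspace, arcname=workspace.name)
    return archive


if __name__ == "__main__":
    # Hypothetical paths; dest_dir must live outside the workspace,
    # otherwise the archive would try to include itself.
    print(backup_workspace(Path("/srv/workspace"), Path("/mnt/backup")))
```

The archive should then be copied to storage that does not depend on the same provider, since a backup that lives in the affected data center can be lost along with the server.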

Request Refund

Mar. 14, 2023

Since I no longer wanted to use their service, I asked for a refund.

Conclusion

Lessons:

- Be sure to have a backup, and make it as complete as possible.

- Not all service providers have the ability or capital to do disaster recovery. Once a server in the data center fails, data loss is very likely.
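One way to check the "as complete as possible" part is to compare the files in a workspace against the contents of its backup archive. The sketch below assumes a tar archive that stores files under a top-level directory named after the workspace; the function name and that layout are my assumptions, and it only considers regular files.

```python
import tarfile
from pathlib import Path


def missing_from_backup(workspace: Path, archive: Path) -> list[str]:
    """List workspace files (as archive-style paths) absent from the archive."""
    with tarfile.open(archive, "r:*") as tar:
        archived = {m.name for m in tar.getmembers() if m.isfile()}
    expected = {
        (Path(workspace.name) / p.relative_to(workspace)).as_posix()
        for p in workspace.rglob("*")
        if p.is_file()
    }
    return sorted(expected - archived)
```

An empty list means every regular file in the workspace has a counterpart in the archive. It does not prove the contents are identical, so an occasional test restore is still worthwhile.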

Loss:

- A few non-essential Python scripts

- Nearly three days of spring break